Analyzing Neural Models by Freezing Subnetworks

by Kevin Duh, Senior Research Scientist

The SCALE2018 Machine Translation workshop focused on building resilient neural machine translation systems for new domains. In addition to developing new algorithms to improve translation accuracy, the team devoted significant effort to analysis techniques for understanding when and why neural networks work.


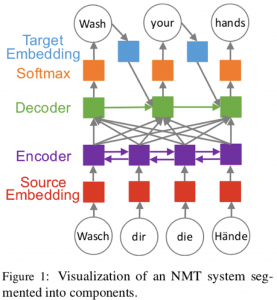

Neural network models, while accurate, are notoriously difficult to interpret because of their high dimensionality and complex structure. This opacity can hinder the development of new algorithms and models. To address this challenge, the SCALE2018 team designed an analysis technique called “freezing subnetworks.”

The technique will be published at the Conference on Machine Translation (WMT2018) in Brussels, Belgium. The idea is to probe the model’s behavior by selectively freezing different components of the overall network during training, that is, holding their parameters fixed while the rest of the network continues to learn. This is one of many research contributions that resulted from the SCALE workshop.
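To make the idea of freezing concrete, here is a toy sketch in plain Python. It is not the SCALE2018 team's translation setup. The two-scalar model, the component names `encoder` and `decoder`, the learning rate, and the `train` helper are all hypothetical choices made for illustration. Each scalar stands in for a subnetwork. Any component listed in `frozen` keeps its starting value, while the others are updated by gradient descent. Comparing the final loss across freezing choices is the kind of probe described above.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy model: prediction = decoder * (encoder * x).
# Each scalar stands in for one "subnetwork"; the names are illustrative only.
Params = Dict[str, float]
Data = List[Tuple[float, float]]


def predict(params: Params, x: float) -> float:
    hidden = params["encoder"] * x  # first component
    return params["decoder"] * hidden  # second component


def mse_loss(params: Params, data: Data) -> float:
    return sum((predict(params, x) - y) ** 2 for x, y in data) / len(data)


def gradients(params: Params, data: Data) -> Params:
    # Analytic gradients of the mean squared error with respect to each scalar.
    n = len(data)
    g_enc = 0.0
    g_dec = 0.0
    for x, y in data:
        err = predict(params, x) - y
        g_enc += 2 * err * params["decoder"] * x / n
        g_dec += 2 * err * params["encoder"] * x / n
    return {"encoder": g_enc, "decoder": g_dec}


def train(params: Params, data: Data, frozen: Set[str],
          lr: float = 0.02, steps: int = 200) -> Params:
    params = dict(params)  # copy so the caller's starting point is untouched
    for _ in range(steps):
        grads = gradients(params, data)  # all gradients use the same parameters
        for name, g in grads.items():
            if name in frozen:
                continue  # frozen component: its parameter is never updated
            params[name] -= lr * g
    return params


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy target mapping y = 2x
start = {"encoder": 0.5, "decoder": 0.5}

# Train once per freezing choice and report the resulting loss.
for frozen in [set(), {"encoder"}, {"decoder"}, {"encoder", "decoder"}]:
    trained = train(start, data, frozen)
    print(sorted(frozen), round(mse_loss(trained, data), 6))
```

In this toy case, either component alone can absorb the needed change, so freezing one of them still lets the loss fall. In a real network, the interesting signal is which frozen components cause accuracy to suffer. In a framework such as PyTorch, the same effect is usually achieved by calling `requires_grad_(False)` on a module's parameters so that the optimizer leaves them unchanged.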